The Delphi method: A powerful tool for group decision-making

What is the Delphi method?

The Delphi method, also known as the Delphi technique or process, is a consensus-based decision-making framework involving repeated rounds of surveys and reflection by a group or panel of experts. It is typically used when consensus is needed among people with different perspectives.

Also known as the estimate-talk-estimate (ETE) technique, Delphi is popular for decisions involving qualitative variables or incomplete evidence, where individuals’ judgments based on their expert knowledge and preferences must be relied on instead of hard data.

The Delphi technique was developed by Project RAND in the early stages of the Cold War in the 1950s for forecasting the impact of technology on warfare. Delphi has since been adapted for a wide variety of applications, ranging from health care to business to government policy-making and more.

Understanding the Delphi method

The Delphi technique uses questionnaires or surveys to elicit group preferences, usually from a panel of experts.

Delphi surveys are usually administered anonymously to avoid group biases such as groupthink, where participants may hesitate to give dissenting opinions in order to conform with the group, and group polarization, where group opinion may be skewed towards extreme positions.

The Delphi technique is particularly useful when:

- Problems are complex and may lack historical data or evidence, so an alternative way to gather information is needed

- Opinions from experts with diverse backgrounds or expertise are sought

- Decision-making needs to be transparent, defensible and potentially auditable

How it works

A Delphi study typically involves several rounds of questionnaires or surveys sent to selected participating experts. A facilitator (or coordinator) manages the process so that participant responses remain anonymous to each other and feedback loops are controlled.

After each survey round, the facilitator summarizes the group’s responses and gives this summary back to the participants, often including statistics like average ratings and ranges alongside curated comments.
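As a rough illustration of the kind of summary a facilitator might feed back, the Python sketch below computes the mean, median and range of each item’s ratings. The 1–5 rating scale, the item names and the ratings themselves are made-up assumptions for illustration, not data from a real Delphi study.

```python
from statistics import mean, median

def summarize_round(ratings):
    """Summarize one Delphi round.

    ratings maps each item to the list of anonymous panel ratings it received.
    Returns per-item statistics suitable for feeding back to the panel.
    """
    summary = {}
    for item, scores in ratings.items():
        summary[item] = {
            "n": len(scores),
            "mean": round(mean(scores), 2),
            "median": median(scores),
            "range": (min(scores), max(scores)),
        }
    return summary

# Hypothetical Round 1 ratings on a 1-5 scale (no names attached, preserving anonymity).
round_1 = {
    "Criterion A": [4, 5, 4, 3, 5],
    "Criterion B": [1, 5, 2, 4, 3],
}

for item, stats in summarize_round(round_1).items():
    print(item, stats)
```

Here Criterion B’s wide range (1 to 5) marks it as an item the next round might focus on.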

The experts are then asked to refine their answers in light of the group feedback. This process is repeated until sufficient agreement is reached.

The Delphi method’s power lies in its combination of anonymity, iteration and controlled feedback, which together reduce the effects of group biases. The goal is to reach group consensus and build better understanding between group members.

Delphi method advantages and disadvantages

The Delphi method can be very useful in group decision-making situations where many qualitative variables are involved. However, like any method, it has its advantages and disadvantages.

Advantages

The Delphi method draws on collective intelligence and diverse expertise for decision-making. Delphi’s advantages include:

- Reduced bias: Because responses are gathered anonymously, ideas are judged on merit rather than on the authority or personality of contributors. Anonymity encourages honest input and reduces group biases.

- Flexibility: Delphi is conducted via surveys, enabling experts in different locations to provide input in their own time. This approach allows a rich variety of perspectives to be gathered without the logistical constraints of in-person meetings, although such meetings can still be added if needed.

- Iterative consensus-building: The repeated rounds with feedback enable participants to refine their thinking and build consensus.

- Transparency: The Delphi technique produces a record of how consensus was reached, including the reasoning behind expert judgments. This transparency can be useful for justifying decisions, such as in policy-making or developing clinical guidelines.

- Applicability to complex problems: When objective data is lacking or the problem is multifaceted, Delphi provides a systematic yet flexible way to synthesize informed opinions. Thanks to this structured flexibility, Delphi has been used for a very wide range of applications – from predicting future technologies to prioritizing patients for health care.

Disadvantages

The Delphi method also has some drawbacks, including:

- Requires preparation: The Delphi method requires thorough preparation and multiple rounds of surveys and feedback in participants’ own time. Depending on the context, reaching a decision can take anywhere from a few hours to several weeks or even months.

- Requires diligence: Delphi requires careful planning, design and consolidation of expert feedback. This can be complicated without a skilled facilitator.

- Not suited for everyday decision-making: Delphi can be a powerful tool for big decisions, but it’s less suitable for everyday decisions that need to be made more quickly and where expert consensus is unnecessary.

- Need for engaged experts: The Delphi technique relies on engaged experts and key decision-makers to reach decisions. These tend to be very busy people who may not be interested in multiple rounds of feedback.

Many of these challenges can be mitigated by using 1000minds decision-making software, which offers surveys and tools to streamline the Delphi process – making Delphi easier, faster and more transparent. An added benefit is that 1000minds also provides real-time analysis and communication of results.

The Delphi process

The Delphi process involves a series of stages or rounds until a decision outcome is reached. The following Delphi method steps are typically included.

1. Problem structuring

Clarify all aspects of the issue at hand:

- What is the problem you are trying to solve?

- Who are the stakeholders affected?

- What are the goals you want to achieve?

The problem could be a broad issue – for example, “What are the top 10 risks facing our industry in the next 5 years?” – or a set of specific questions.

2. Panel selection

Choose a group of participants with relevant knowledge and experience to participate in your Delphi process.

Delphi panel sizes can vary, ranging from half a dozen to 50 people, depending on the topic and the need for diverse inputs. Make sure the panel is sufficiently diverse to cover different viewpoints and that all members have insights into the problem being addressed.

Assure participants that their responses will be anonymous so that they feel safe to express their honest opinions without fear of judgment. Also designate a facilitator to lead the process; this could be yourself or someone who understands the Delphi method, ideally with domain expertise.

3. Round 0: Initial survey

The facilitator sends out a survey to the group of experts, usually comprising open-ended questions to gather broad ideas, issues or possible solutions.

For example, if your goal is to identify criteria for evaluating a policy, the first-round question might be: “What criteria do you think are most important for assessing this policy’s success?”

The experts are asked to independently comment on each topic in the survey based on their subject-matter expertise and preferences. Experts answer individually and privately, returning their answers to the facilitator, who compiles them.

All input at this stage should be independent and anonymous: panelists do not interact with each other, enabling independent thinking. This anonymity encourages people to share controversial or creative ideas, yielding a deep and bountiful pool of viewpoints.

4. Round 1: Feedback and convergence

The facilitator summarizes the collected responses and shares them with the group for further feedback. Each expert can see the group perspective and then add to it or answer the next set of questions.

The summary and subsequent round may take different forms depending on Round 0. For example:

- If Round 0 produced a list of items, Round 1 might ask panelists to rank or rate them.

- If Round 0 collected open-ended opinions about trends, Round 1 might ask panelists to comment on the collective feedback or reconsider their initial positions.

This feedback loop is central to Delphi’s consensus-building: it challenges experts’ thinking with peer input while avoiding direct confrontation. By the end of Round 1, you are likely to see some convergence of opinions.

5. Round 2: Revision and further iteration

Responses from Round 1 are summarized, highlighting areas of disagreement or the current level of consensus for each item. This summary is sent back for another round to further narrow differences.

Panelists can revise their responses according to the group commentary, or provide another rating, ranking or comment based on the feedback. Aggregating and redistributing responses continues until the facilitator judges that sufficient consensus has been reached. This usually takes about 2–4 rounds.

6. Final analysis and consensus

The Delphi process concludes when sufficient consensus has been reached. Define beforehand what “sufficient consensus” means (e.g., a percent agreement or a narrow interquartile range).
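For example, a consensus rule combining percent agreement and interquartile range (IQR) could be written as below. This is a sketch only: the 1–5 scale, the 70% agreement cut-off and the IQR limit of 1 are illustrative assumptions that each study should set for itself before the first round.

```python
from statistics import quantiles

def percent_agreement(scores, agree_at=4):
    """Share of panelists rating at or above agree_at (e.g. 4 or 5 on a 1-5 scale)."""
    return sum(s >= agree_at for s in scores) / len(scores)

def iqr(scores):
    """Interquartile range, treating the panel's scores as the whole population."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return q3 - q1

def has_consensus(scores, min_agreement=0.7, max_iqr=1.0):
    """Hypothetical stopping rule: enough agreement AND a narrow spread."""
    return percent_agreement(scores) >= min_agreement and iqr(scores) <= max_iqr

item_1 = [4, 5, 4, 3, 5, 4, 4]  # hypothetical ratings from seven panelists
item_2 = [1, 5, 2, 4, 3, 5, 2]

print(has_consensus(item_1), has_consensus(item_2))
```

Requiring both conditions guards against a high average that hides a polarized panel.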

Even if full consensus isn’t reached, the final results clarify the degree of agreement and the remaining spread of opinion. The facilitator summarizes the findings (for example, a consensus statement, a prioritized list, or forecasts with probabilities) and shares the summary with the panel and other stakeholders. At this point anonymity can be lifted if desired; sometimes panelists are brought together for a concluding discussion, but that is optional. The synthesized results are then used to make a final decision.

Throughout all rounds of the Delphi method, anonymity and iteration are crucial. Panel members only see anonymized individual responses or aggregated information (such as statistical summaries or thematic syntheses), but never experts’ responses attributed by name. This ensures ideas from junior or quiet participants carry as much weight as those from senior or outspoken individuals, supporting merit-based evaluation of ideas.

The iterative design with multiple rounds gives participants ample opportunities to reflect on their opinions in the light of feedback, which is key to gradually building consensus.

Modifying the Delphi process to your needs

The full Delphi process outlined above – sometimes referred to as “traditional” or “classical” Delphi – can be easily adapted or modified to suit your particular goals and needs.

Modifying the Delphi process – “modified” Delphi – usually involves one or more of these changes:

- Instead of starting with open-ended questions, a modified Delphi may begin by presenting panelists with an array of existing evidence, objectives or alternatives, which are refined and built upon in subsequent Delphi rounds.

- There may be fewer rounds of feedback and a smaller number of panelists compared to traditional Delphi.

- In between anonymous questionnaires, an in-person discussion round may be added.

Even with such changes, modified Delphi retains the traditional Delphi method’s core strengths of iterative feedback and anonymized input, while pragmatically adapting the process to the situation at hand.

Whether you run a traditional Delphi or modified Delphi, the goal is the same: to capture the collective intelligence of a group in a fair, systematic and transparent way.

Whereas traditional Delphi is more exploratory and in-depth, modified Delphi tends to be faster and easier to implement. Therefore, using a modified Delphi approach is more common in applied fields like health care, business and government policy-making where timely, actionable consensus is important.

Depending on the application, you might also modify the Delphi process to be more comprehensive and gain deeper insights into a topic. For example, incorporating in-person or virtual discussions after anonymous rounds helps add rich dialogue and builds better understanding of the topic under consideration.

Delphi method examples and real-world applications

Although the Delphi method was originally developed for military forecasting, it is now used across a wide range of disciplines. Here are three examples of how the Delphi technique can be applied to real-world situations.

Health care policy consensus

Imagine a national health ministry that wants to develop guidelines for telemedicine use. It convenes a Delphi panel of doctors, nurses, patients and health administrators to determine the most important criteria for evaluating and prioritizing the telemedicine services available.

In Round 0 of the Delphi process, an online survey is used to elicit dozens of suggested criteria and related concerns from panelists. Subsequent rounds focus on narrowing down and weighting the most important criteria – for example, using a method like PAPRIKA.

The final result is a set of criteria and weights for prioritizing telemedicine services offered by the health system. The strength of using Delphi is that the final decisions have buy-in from all stakeholder groups, because the process captured everyone’s input fairly and transparently. Policy-makers can have confidence that decisions reflect participants’ preferences.

Business strategic planning

A company might use Delphi to forecast market trends or prioritize investments. For example, a tech company seeking to identify the “next big things” to focus on over the next decade can convene a Delphi panel of experts in technology, consumer behavior and economics.

Over multiple rounds the experts list emerging technologies and estimate their likely impact; each round they refine forecasts based on panel feedback. The company can aggregate these forecasts and score each technology on criteria such as impact, likelihood and risk – producing outputs such as a risk–opportunity matrix to guide R&D investment decisions.
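One way such aggregation might look is sketched below. It takes the median of the panel’s final-round scores on a 1–10 scale, then places each technology in a simple risk–opportunity quadrant. The scoring rule (opportunity as impact × likelihood, divided by 10), the cut-off of 5, the quadrant labels and the technology names are all hypothetical assumptions, not a prescribed Delphi procedure.

```python
from statistics import median

def aggregate(forecasts):
    """Median panel score per technology and criterion (the median resists outliers)."""
    return {
        tech: {criterion: median(scores) for criterion, scores in criteria.items()}
        for tech, criteria in forecasts.items()
    }

def quadrant(scores, cutoff=5):
    """Place a technology in a 2x2 risk-opportunity matrix (hypothetical rule)."""
    opportunity = scores["impact"] * scores["likelihood"] / 10  # scaled back to at most 10
    high_opportunity = opportunity >= cutoff
    high_risk = scores["risk"] >= cutoff
    if high_opportunity and not high_risk:
        return "pursue"
    if high_opportunity and high_risk:
        return "invest cautiously"
    if not high_opportunity and not high_risk:
        return "monitor"
    return "avoid"

# Hypothetical final-round forecasts from three panelists.
forecasts = {
    "Technology X": {"impact": [8, 9, 7], "likelihood": [7, 8, 8], "risk": [3, 4, 2]},
    "Technology Y": {"impact": [9, 8, 9], "likelihood": [3, 4, 2], "risk": [8, 7, 9]},
}

for tech, scores in aggregate(forecasts).items():
    print(tech, scores, quadrant(scores))
```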

Delphi provides a structured, consensus-based framework for strategic planning and a documented rationale for why particular investments were prioritized.

Government policy-making

Imagine a national police organization that wants to create a 5-year plan for resource allocation based on likely future crime developments. Because precise modelling of future trends is impossible, experts’ judgments substitute for hard data.

The organization gathers a panel of subject-matter experts (policy analysts, criminologists, social scientists) who, through rounds of questionnaires and feedback, provide input on possible crime developments, their likelihoods and influencing factors. Consensus yields a ranked list of the most likely developments and influencing factors.

This prioritized list can be used for strategic planning and preparedness. An added benefit is that Delphi produces a transparent, auditable process that builds trust in government planning and decision-making.

1000minds surveys and tools to support Delphi processes

Implementing the Delphi technique (both traditional and modified) can often be complex, time-consuming and labor-intensive.

Fortunately, Delphi can be made easier, as well as more valid, efficient and transparent, using 1000minds software.

The main Delphi tasks supported by 1000minds surveys and tools are summarized in the following table.

| Delphi task | 1000minds |
|---|---|
| Anonymous collection of panelists’ inputs in Delphi rounds | Available survey types: ranking, categorization and preferences surveys |
| Custom survey questioning | Gather qualitative and open-ended inputs as part of 1000minds surveys. |
| Preference elicitation and consensus building | Panelists’ preferences elicited via simple pairwise comparison questions; supports group decision-making with anonymous voting, discussion and consensus. |
| Analytics and progress monitoring | Automated real-time charts and summaries, plus reporting of panelists’ progress during Delphi rounds. |
| Process data management and documentation | Data and progress are stored with auditable logs, ensuring transparency and easy revisions if desired. |

The three types of 1000minds survey mentioned in the table – ranking, categorization and preferences – are each designed to gather a particular kind of information. As well as producing individual results for each participant and average results for the group, these surveys can be used for group decision-making and reaching consensus.

In addition, all three surveys are useful for revealing the extent to which participants agree and disagree – their judgmental variability – when engaging in the particular judgmental activity in the survey. As discussed in the next section, the ranking and categorization surveys, also known as noise audits, are a great way to start a Delphi process and engage panelists.

These three surveys and other 1000minds tools referred to in the table, including anonymous voting, are now discussed in more detail.

Revealing agreements and disagreements with 1000minds “noise audits”

Two types of noise audit are supported by 1000minds ranking and categorization surveys, enabling you to measure the extent to which participants agree and disagree – their judgmental variability (noisiness) – when ranking or rating alternatives of interest.

1000minds ranking surveys

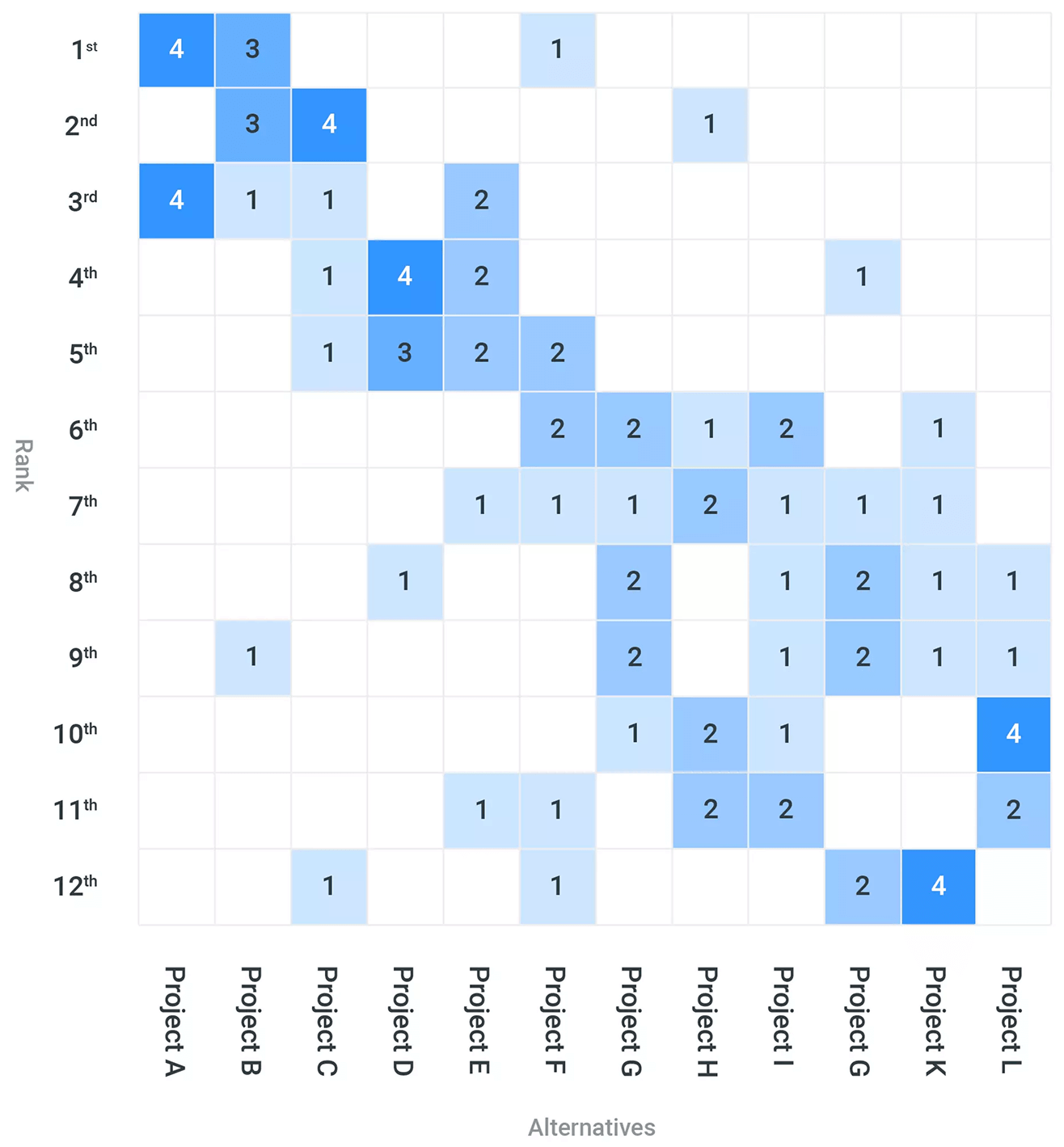

A ranking survey asks participants to use their intuition to rank various alternatives or case scenarios, often accompanied by short descriptions, based on their expert knowledge and preferences. Participants’ rankings are then compared to reveal the variability, or “noisiness”, in their judgments. 1000minds produces a heatmap to visually communicate this variability, as illustrated by the example in Figure 2. Such a heatmap can help convince decision-makers that they need a more valid and reliable decision-making process than relying on their intuition. Depending on the application, reviewing the ranking survey results is also an opportunity for participants to discuss why they ranked the alternatives the way they did. For some applications, this discussion leads into defining the underlying criteria, and their levels of performance, for the ranking or prioritization decision being considered.
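The data behind a ranking heatmap of this kind can be sketched as a count of how many participants placed each alternative at each rank position, plus the spread of positions each alternative received. This is an illustrative Python sketch using hypothetical data (four cases, P to S, ranked by three participants); it is not meant to reproduce 1000minds’ own heatmap calculation.

```python
def rank_heatmap(rankings):
    """Count how often each alternative was placed at each position.

    rankings: one list per participant, ordering the same alternatives best-first.
    Returns alternative -> [count at position 1, count at position 2, ...].
    """
    alternatives = sorted(rankings[0])
    heat = {alt: [0] * len(alternatives) for alt in alternatives}
    for ranking in rankings:
        for position, alt in enumerate(ranking):
            heat[alt][position] += 1
    return heat

def rank_spread(rankings):
    """Difference between the worst and best position each alternative received."""
    positions = {}
    for ranking in rankings:
        for position, alt in enumerate(ranking, start=1):
            positions.setdefault(alt, []).append(position)
    return {alt: max(p) - min(p) for alt, p in positions.items()}

# Hypothetical rankings of four cases by three participants (best first).
rankings = [
    ["P", "Q", "R", "S"],
    ["Q", "P", "S", "R"],
    ["P", "S", "Q", "R"],
]

spread = rank_spread(rankings)
for alt, counts in rank_heatmap(rankings).items():
    print(alt, counts, "spread:", spread[alt])
```

Rows whose counts are spread across many positions (here Q and S) are the natural starting points for discussion.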

1000minds categorization surveys

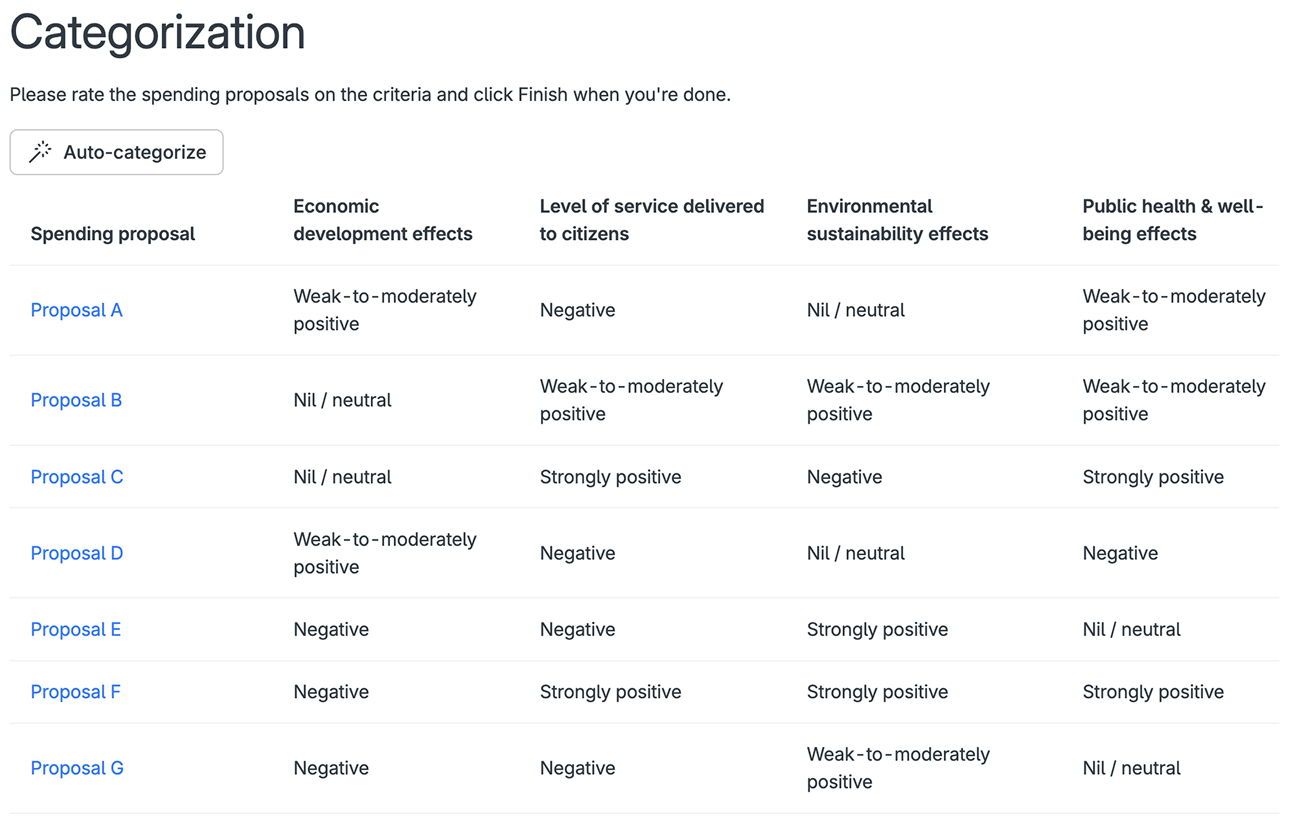

A second type of noise audit for measuring the variability in Delphi panelists’ judgments is to run a categorization survey.

This survey asks participants to categorize or rate various alternatives or case scenarios, often accompanied by short descriptions, on pre-specified criteria or attributes, again based on participants’ expert knowledge and preferences.

As illustrated in Figure 3, for example, each participant was asked to rate seven proposals (A–G) on each of the criteria shown. These ratings can be gathered anonymously and may be followed by a discussion to build consensus on the alternatives’ ratings. Alternatively, you can simply use averages to determine the final ratings – for use at other stages of a Delphi process.
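If you take the averaging route, it can still help to flag ratings with a wide spread before settling on averages. The sketch below does both; the 1–3 rating scale (e.g. low, medium, high), the two-point gap used to flag disagreement and the example ratings are assumptions for illustration only.

```python
from statistics import mean

def summarize_ratings(ratings, gap=2):
    """Average each (proposal, criterion) rating and flag wide disagreement.

    ratings: (proposal, criterion) -> list of participants' ratings on a 1-3 scale.
    Returns (proposal, criterion) -> (average rating, needs_discussion).
    """
    summary = {}
    for key, scores in ratings.items():
        needs_discussion = max(scores) - min(scores) >= gap
        summary[key] = (round(mean(scores), 2), needs_discussion)
    return summary

# Hypothetical ratings from three participants.
ratings = {
    ("A", "Cost"): [1, 1, 2],
    ("A", "Impact"): [1, 3, 3],
    ("B", "Cost"): [2, 2, 2],
}

for (proposal, criterion), (avg, flag) in summarize_ratings(ratings).items():
    print(proposal, criterion, avg, "discuss" if flag else "ok")
```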

1000minds offers additional tools that support later stages of the Delphi method by similarly sending out surveys and highlighting areas of agreement and disagreement among participants. These results are typically presented at in-person or online workshops to encourage discussion and build consensus.

In applications involving ranking or choosing alternatives, after a discussion of your ranking-survey results you will be in a position to specify the criteria and their levels for the decision at hand. If you have also rated the alternatives using a categorization survey, then you will be ready to determine the relative importance or weights of the criteria to be used to rank the alternatives.

In the past, these criterion weights were often created by decision-makers directly assigning point values to represent the relative importance of the criteria, known as the “direct rating” method. However, because this method essentially involves assigning weights arbitrarily, it is widely criticized for being invalid and unreliable, resulting in poor decisions.

This is where 1000minds’ PAPRIKA weighting method, a type of pairwise comparisons method, comes in, as explained next.

Group decision-making using pairwise comparisons

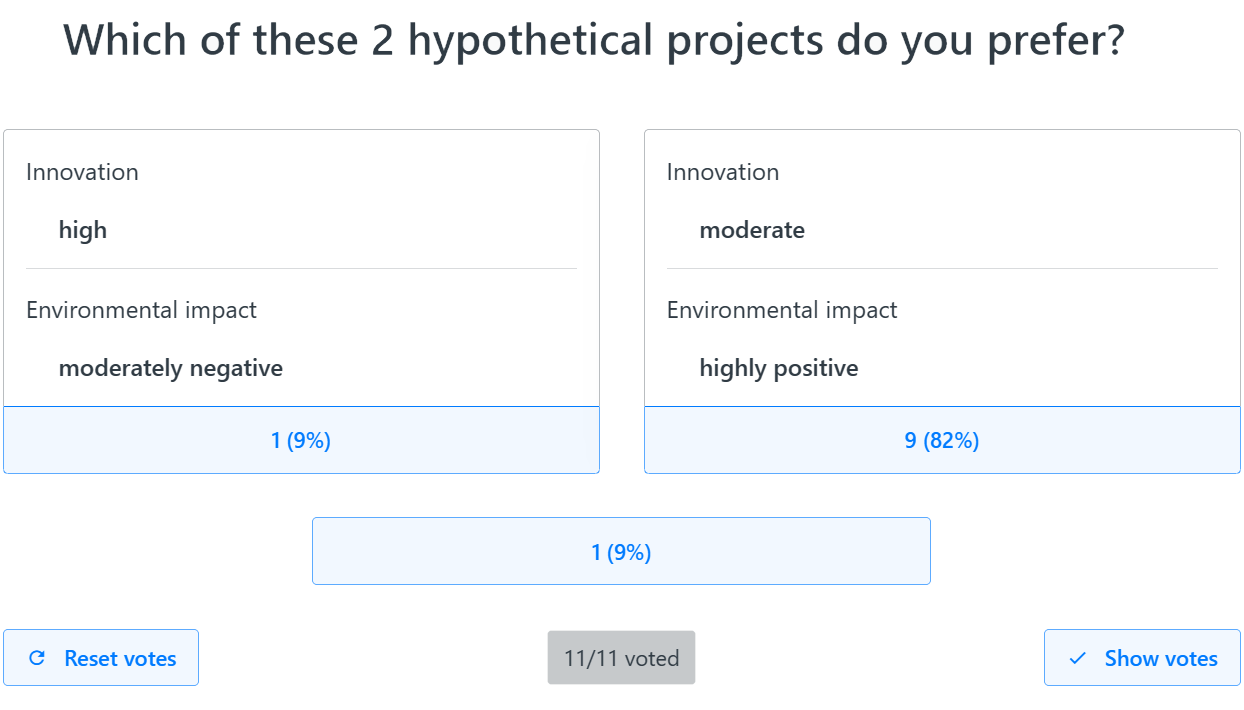

1000minds elicits Delphi panelists’ preferences about the relative importance of decision criteria via a series of trade-off questions. Each question involves a trade-off between two hypothetical alternatives defined on two criteria at a time.

From each participant’s answers to the questions, 1000minds calculates the weights on the criteria, representing their relative importance.

An interactive example of these trade-off questions appears in Figure 4.
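To show how answers to trade-off questions can constrain criterion weights, here is a deliberately simplified Python sketch. It is not the PAPRIKA method itself, whose details are not covered here; it simply enumerates small integer point values for an additive model and keeps those consistent with every answer. The three criteria, their levels, the 1–9 point grid and the answers are hypothetical assumptions.

```python
from itertools import product
from statistics import mean

# Hypothetical criteria, each with levels 0 (worst), 1 and 2 (best).
CRITERIA = ["effectiveness", "cost", "equity"]
MAX_POINTS = 9  # assumed upper bound for the integer point-value search

def value(points, alternative):
    """Additive value of a partial alternative: sum of its levels' points."""
    return sum(points[c][level] for c, level in alternative.items())

def consistent(points, answers):
    """True if the point values reproduce every answer ('>' preferred, '=' equal)."""
    for x, y, verdict in answers:
        vx, vy = value(points, x), value(points, y)
        if (verdict == ">" and vx <= vy) or (verdict == "=" and vx != vy):
            return False
    return True

def feasible_weights(answers):
    """Enumerate integer point values consistent with the answers.

    Each criterion scores 0 at its worst level and 0 < level 1 < level 2.
    A criterion's weight is its best-level points divided by the sum over criteria.
    """
    options = [(0, a, b) for a in range(1, MAX_POINTS)
               for b in range(a + 1, MAX_POINTS + 1)]
    solutions = []
    for combo in product(options, repeat=len(CRITERIA)):
        points = dict(zip(CRITERIA, combo))
        if consistent(points, answers):
            total = sum(p[2] for p in combo)
            solutions.append({c: points[c][2] / total for c in CRITERIA})
    return solutions

# Each answer compares two hypothetical alternatives defined on two criteria;
# the criteria not mentioned are assumed identical and so cancel out.
answers = [
    ({"effectiveness": 2, "cost": 0}, {"effectiveness": 0, "cost": 2}, ">"),
    ({"cost": 2, "equity": 0}, {"cost": 0, "equity": 2}, ">"),
    ({"cost": 2, "effectiveness": 0}, {"cost": 0, "effectiveness": 1}, ">"),
]

solutions = feasible_weights(answers)
average = {c: round(mean(w[c] for w in solutions), 3) for c in CRITERIA}
print(len(solutions), "consistent point systems; average weights:", average)
```

Every consistent solution here ranks effectiveness above cost above equity, but the exact weights vary from solution to solution; averaging them is just one simple way to report a single set, and answering more questions narrows the range.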

By asking participants to answer the simplest possible trade-off questions – choosing between two alternatives defined on just two criteria at a time – the decision-making process is made as easy as possible.

Therefore, participants can have more confidence in their answers, which increases the validity and reliability of the resulting decision weights – all in an inclusive and collaborative fashion consistent with Delphi.

Participants can answer their trade-off questions in two ways:

- individually via a preferences survey

- together as a group by voting on each question and achieving consensus

1000minds preferences surveys

A preferences survey involves each participant answering the trade-off questions in their own time, which can be done anonymously.

As well as average weights for the group surveyed, the survey’s results include individual participants’ weights. This makes preferences surveys a powerful tool for highlighting areas of agreement and disagreement for discussion. Preferences surveys can also be used as a warm-up exercise for the trade-off questions in a voting exercise.

1000minds voting

1000minds’ voting mechanism is another approach for eliciting participants’ preferences via their answers to the trade-off questions and achieving consensus. The questions presented are the same, but this time participants vote on each question together as a group, as part of an online or in-person workshop.

The method works like this:

- Panelists vote anonymously on individual trade-off questions.

- After each question, the facilitator reveals the votes on that question, which shows how much agreement or disagreement there is on the specific trade-off.

- Panelists discuss why they voted the way they did, thereby building understanding and encouraging consensus.

- After sufficient discussion, the votes are reset and participants are prompted to vote again.

- When sufficient agreement has been reached, the group moves on to the next question, and the process repeats.
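The tallying step in each voting round can be sketched as follows. The answer labels (“left”, “right”, “equal”) and the 75% threshold for moving on are assumptions for illustration; in practice, what counts as sufficient agreement is up to the facilitator and the group.

```python
from collections import Counter

def tally(votes):
    """Summarize anonymous votes on one trade-off question.

    votes: list of choices, each "left", "right" or "equal".
    Returns (most common choice, its share of the votes, full counts).
    """
    counts = Counter(votes)
    top_choice, top_count = counts.most_common(1)[0]
    return top_choice, top_count / len(votes), dict(counts)

def ready_to_move_on(votes, threshold=0.75):
    """Hypothetical rule: move on once one answer has at least `threshold` of votes."""
    _, share, _ = tally(votes)
    return share >= threshold

first_vote = ["left", "right", "left", "equal", "right", "left"]
revote = ["left", "left", "left", "left", "right", "left"]  # after discussion

print(tally(first_vote), ready_to_move_on(first_vote))
print(tally(revote), ready_to_move_on(revote))
```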

In this way, 1000minds voting resembles a fast-paced Delphi process with its rounds of feedback and consensus, and leads to decisions based on mutual understanding that everyone can stand behind. For most participants, voting is engaging and fun, as well as great for team-building.

Automated feedback and analysis

One of the hardest jobs for a Delphi facilitator is turning round-by-round responses into meaningful feedback to participants for the next Delphi round. 1000minds helps solve this issue by automatically analyzing survey results in real time.

As you’re collecting responses using 1000minds, the software calculates statistics (means, medians, rank orders, frequencies, etc.) and generates a variety of charts to help facilitators interpret and communicate results.

These statistics can easily be shared with a panel through reports or workshops, which saves time and improves clarity by providing structured feedback to participants. Because the data is in one place, you have documentation of all the responses and how the decision-making is evolving.

Decision model and results synthesis

At the end of the Delphi process you will have built a decision model – e.g. a weighted list of criteria and levels, a ranked list of alternatives, etc.

1000minds enables you to build such a model using the aforementioned surveys (ranking, preferences, categorization), voting and associated processes – complete with charts and other documentation and analyses. Some of these outputs also help you incorporate sensitivity and uncertainty analyses.

In addition, some applications involve allocating budgets or other scarce resources across (competing) alternatives, typically with the objective of maximizing “value for money” and efficiency. 1000minds includes a powerful resource-allocation framework.
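A common simplification of value-for-money allocation is a greedy rule: fund alternatives in descending order of value per unit cost while the budget lasts. The sketch below illustrates that idea only. It is not 1000minds’ resource-allocation framework, greedy selection is not guaranteed to find the best possible portfolio, and the alternatives, values, costs and budget are hypothetical.

```python
def allocate(alternatives, budget):
    """Greedy value-for-money allocation.

    alternatives: list of (name, value, cost) with cost > 0.
    Funds alternatives in descending order of value/cost, skipping any that
    no longer fit. Returns (funded names, unspent budget).
    """
    funded, remaining = [], budget
    ranked = sorted(alternatives, key=lambda a: a[1] / a[2], reverse=True)
    for name, value, cost in ranked:
        if cost <= remaining:
            funded.append(name)
            remaining -= cost
    return funded, remaining

options = [("A", 80, 40), ("B", 60, 20), ("C", 50, 50), ("D", 30, 10)]
print(allocate(options, budget=70))
```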

One advantage of using 1000minds for Delphi is that 1000minds lets you use the same decision model for repeated decision-making applications, thereby saving time and effort for future decisions.

The Delphi consensus achieved is stored as a decision model where new alternatives can be entered and automatically scored using the agreed criteria and weights. The model can be easily revised if needed. The outcome of your Delphi process is not just a one-off result but an enduring system or tool for ongoing decision-making.
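A decision model of this kind can be as simple as a table of points for each level of each criterion, with alternatives scored by adding up their points. The sketch below assumes hypothetical criteria and point values whose best levels sum to 100, so each criterion’s top score doubles as its weight; it illustrates an additive points model in general rather than any specific 1000minds output.

```python
# Hypothetical agreed model: criterion -> level -> points.
MODEL = {
    "effectiveness": {"low": 0, "medium": 20, "high": 45},
    "cost": {"high": 0, "medium": 15, "low": 30},
    "equity": {"low": 0, "high": 25},
}

def score(alternative, model=MODEL):
    """Total points for an alternative described by one level per criterion."""
    return sum(model[criterion][level] for criterion, level in alternative.items())

def rank(alternatives, model=MODEL):
    """Names of alternatives, highest total score first."""
    return sorted(alternatives, key=lambda name: score(alternatives[name], model),
                  reverse=True)

# New alternatives entered after the Delphi study, scored with the agreed model.
new_options = {
    "Option 1": {"effectiveness": "high", "cost": "medium", "equity": "low"},
    "Option 2": {"effectiveness": "medium", "cost": "low", "equity": "high"},
}

for name in rank(new_options):
    print(name, score(new_options[name]))
```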

Applying the Delphi method to rank or choose alternatives

The Delphi process is a general consensus-based decision framework intended for use with many types of application.

A common type of application used in a wide variety of areas involves ranking, prioritizing or choosing alternatives based on considering multiple criteria – commonly known as multi-criteria decision analysis (MCDA).

A typical process for this kind of decision-making is outlined in Figure 6, which has three main stages, all of which are amenable to the Delphi method:

- Problem structuring: The process begins with clearly defining the problem or issue being addressed and assembling the process’ key components.

- Model building: A decision model, or evaluation framework, comprising alternatives, criteria and values is created.

- Challenging thinking: The model’s output is analyzed, extended and stress-tested, with the ultimate goal of developing an action plan for implementation.

A key feature of the process outlined in Figure 6 is its iterative nature with feedback loops at each stage. Ideas and inputs can be revisited and refined as the process moves along – and repeatedly so – instead of necessarily maintaining a linear, uni-directional path.

The Delphi method fits in with each stage of the decision-making process, as explained below.

1. Problem structuring

After identifying the decision problem or issue to be addressed, the key components of the decision-making process need to be uncovered:

- What are the decision-makers’ underlying goals and values?

- Who are the relevant stakeholders for the decision?

- What associated uncertainties and constraints exist?

- What, if any, are the alternatives to be evaluated?

- What might the external environment look like?

The Delphi method can be used for uncovering these key components, which can be further refined as the decision-making process evolves.

As you embark on your first Delphi exercise – and as you plan for future ones – you should consider:

- How many Delphi rounds will be conducted?

- How long should the group spend on each round?

- Should panelist discussions be conducted in person or online (or both)?

- What level of agreement or consensus is sufficient before moving on to the next Delphi round?

Also, in support of the process overall, it’s useful at this first stage to undertake (or commission) preliminary research, gathering background information and related work. This information should be made available to participants to help inform their thinking at the various stages, as needed.

2. Model building

After having identified the key components of your decision problem, the next stage is to build a model for decision-making. Building a model comprises these three main elements:

- Defining the criteria for the decision at hand

- Eliciting values, or weights, representing the relative importance of the criteria

- Specifying the alternatives of interest and evaluating them on the criteria to arrive at a ranking of the alternatives

This model-building can be done using specialized group decision-making software such as 1000minds and based on a Delphi process. Depending on the application, potentially different groups can participate at different points, selected according to their expertise and availability.

For example, a Delphi survey could ask panelists to identify the criteria they would use to evaluate options, and collectively agree on a final set, with levels on each criterion.

And then the panelists – potentially, a subset or a different group – can be surveyed or work together collaboratively to determine the relative importance, or weights, of the criteria.

Subsequent rounds of Delphi can be used for gathering panelists’ evaluations of how alternatives perform on the criteria – for example, how closely aligned are the various options with the company’s overall strategic direction?

By the end of this stage, you will have built a decision-making model. This model can be applied and further refined at the next stage, “challenging thinking”.

3. Challenging thinking

Based on the decision model at the previous stage, the facilitator (or research team) highlights key findings and takeaways relevant to the decision problem or issue identified at the start of the decision-making process.

Various charts (such as those available in 1000minds) can be used to visually communicate the model’s results to participants as clearly as possible for them to discuss.

For example, if the Delphi process at the previous stage revealed significant variability in the weights on the criteria across the panelists (as is often the case), this heterogeneity in people’s preferences, as well as the average weights, can be illustrated in a radar chart.

The robustness of the model’s results can be tested by checking their sensitivity to alternative plausible formulations of the model’s criteria, weights and alternatives. Where appropriate, new alternatives can be introduced to the model.
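A basic one-at-a-time weight sensitivity check might look like the sketch below: nudge each weight up and down, renormalize, and see whether the top-ranked alternative changes. The weighted-sum model, the 0.1 step, the 0–1 performance scores and the example data are all hypothetical assumptions.

```python
from itertools import product

def weighted_ranking(weights, performance):
    """Rank alternatives by weighted sum of their 0-1 criterion scores (best first)."""
    totals = {
        alt: sum(weights[c] * s for c, s in scores.items())
        for alt, scores in performance.items()
    }
    return sorted(totals, key=totals.get, reverse=True)

def sensitivity(weights, performance, delta=0.1):
    """Shift each weight by -delta and +delta in turn (renormalizing to sum to 1)
    and record any shift that changes the top-ranked alternative."""
    baseline = weighted_ranking(weights, performance)[0]
    flips = []
    for criterion, sign in product(weights, (-1, 1)):
        shifted = dict(weights)
        shifted[criterion] = max(0.0, shifted[criterion] + sign * delta)
        total = sum(shifted.values())
        shifted = {c: w / total for c, w in shifted.items()}
        top = weighted_ranking(shifted, performance)[0]
        if top != baseline:
            flips.append((criterion, sign * delta, top))
    return baseline, flips

weights = {"impact": 0.5, "cost": 0.3, "equity": 0.2}
performance = {
    "X": {"impact": 1.0, "cost": 0.2, "equity": 0.4},
    "Y": {"impact": 0.6, "cost": 0.9, "equity": 0.5},
}

print(sensitivity(weights, performance))
```

With these illustrative numbers, a 0.1 shift in either the impact or the cost weight is enough to change the top alternative from Y to X, which would be worth raising with the panel rather than treating the ranking as settled.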

Via a survey, the Delphi method can be used to elicit any additional relevant information from participants, which can then be synthesized with the model’s results to yield further possible insights.

The results and insights from the model should be compared with decision-makers’ intuitions, with the ultimate objective of challenging their thinking and helping develop an action plan (with the possibility of future improvements).

Example of a Delphi-based decision-making process supported by 1000minds

The full suite of 1000minds surveys and tools referred to earlier is available to support the Delphi-based process presented in Figure 7 for creating a consensus-based priority ranking of alternatives.

A version of this process for creating a consensus-based tool or system for prioritizing patients for access to health care is also available.

The 10 steps in the process below can be adapted, including being pared down.

For example, if you are in a hurry, steps 2-4 can be dispensed with, i.e. assuming you already know the criteria you want to use – but do you really?

Preliminary steps for identifying and specifying your decision criteria (and levels):

Steps for determining the weights on the criteria, representing their relative importance:

Validating and applying your criteria and weights and the resulting ranking of alternatives:

Conclusion

The Delphi method is a powerful tool for consensus-based decision-making, especially when hard data and evidence are scarce.

Whether you are an academic researcher designing a Delphi process for the first time, a business leader weighing strategic options with your team or a government policy-maker seeking consensus, the Delphi method helps you make decisions that are inclusive and transparent.

And with the principles outlined in this article, combined with the tools available for implementing the Delphi method, you are well-equipped to leverage Delphi for valid and reliable group decision-making.

Though the Delphi process can be complex and time-consuming on its own, it can be easily implemented using 1000minds surveys and group decision-making tools.

1000minds makes it easy to execute Delphi’s steps – generating ideas, eliciting participants’ preferences (individually and collectively), communicating results and building consensus.

If you’d like to speak with an expert about how 1000minds can be used for your next Delphi study, simply book a free demo with us today.